Toxic Behavior Detector

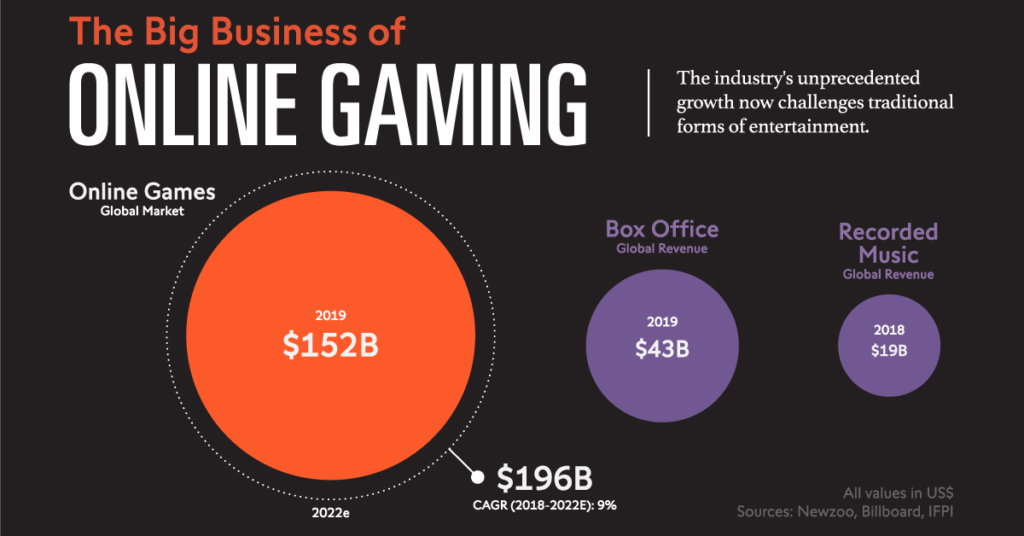

Berkeley, CA — This fall, a group of UC Berkeley students partnered with GGWP, an online gaming startup, to create a toxic behavior detector for online games. The tool lets game developers easily embed a machine learning model into their systems that detects toxic messages in real time. Toxic behavior is a pressing problem in a huge and growing industry, one that grossed 152 billion dollars in global revenue in 2019 alone, more than the global box office and recorded music revenues combined. According to the Newzoo 2020 Global Games Market Report, the industry is expected to grow at a compound annual growth rate of 9.4 percent through 2023.

“There is not a tool like this for smaller game companies or developers, whose main goal is typically to create a functional and fun game,” said Ed Henrich, a startup advisor and lecturer at UC Berkeley’s Sutardja Center for Entrepreneurship and Technology. “Big companies [like Ubisoft and Activision] most likely have a team dedicated to tackling toxic behaviors in their products. Smaller companies probably do not have that level of resources while having to worry about other issues related to their games, which makes this algorithm a valuable tool in helping them reach their goals faster.”

For many players, especially kids between the ages of 8 and 17, online gaming offers an escape from everyday routine and a way to belong to a larger community. Chatting with other players is a fundamental part of these games. Every player's experience is different, but many share one thing in common: harassment.

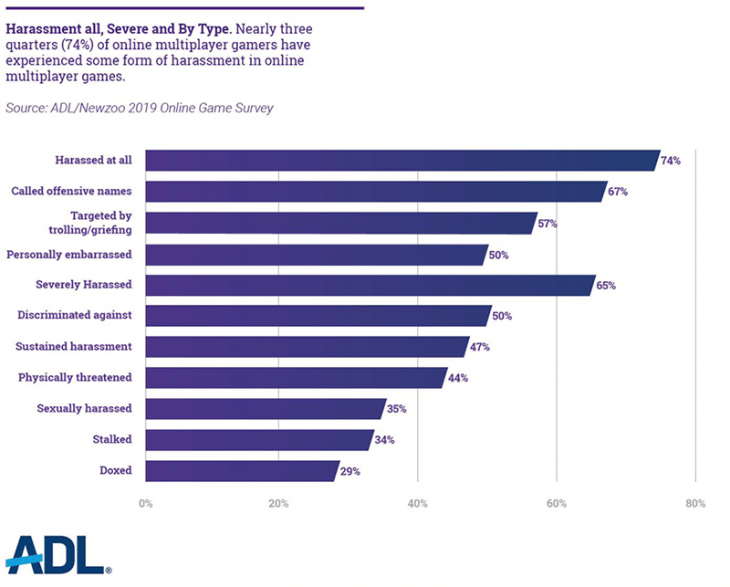

Nearly three-quarters (74%) of online gamers have experienced some form of harassment, according to the ADL/Newzoo 2019 Online Game Survey. Online bullies and trolls not only spoil the gaming experience for everyone but can also expose kids to abuse and trauma.

Using a range of natural language processing (NLP) methods, the students developed a model that takes game-specific attributes into account to produce a response score. Game moderators can use this score to decide how to respond to incidents after the fact. In real time, a player who tries to send a toxic message is blocked from sending it and receives a warning scaled to the message's severity. Moderators also receive a generated report that profiles the players in their games, distinguishing toxic players from non-toxic casual players, to help inform their decisions. During their demo, the team presented two deployment options for gaming companies: a client-side or a server-side toxicity detection system, each customized to the company's gaming environment.
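The real-time blocking and the player report described above can be illustrated with a minimal sketch. This is not the team's implementation. It assumes the model already outputs a toxicity score between 0 and 1. The thresholds, warning text, the 20% cutoff for labeling a player toxic, and the names `moderate_message` and `player_report` are all hypothetical. Because the logic is plain Python with no model dependency, the same gating step could in principle run on either the client or the server.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple

# Hypothetical severity thresholds on a toxicity score in [0, 1];
# these values are illustrative, not taken from the GGWP project.
WARN_THRESHOLD = 0.5
SEVERE_THRESHOLD = 0.8


@dataclass
class Decision:
    allowed: bool           # whether the message may be sent
    warning: Optional[str]  # warning shown to the sender, if any


def moderate_message(score: float) -> Decision:
    """Map a (hypothetical) model toxicity score to a real-time action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= SEVERE_THRESHOLD:
        # Most severe tier: block the message and issue a strong warning.
        return Decision(False, "Severe toxicity detected: message blocked.")
    if score >= WARN_THRESHOLD:
        # Moderate tier: block the message with a milder warning.
        return Decision(False, "Please keep chat respectful: message blocked.")
    # Below both thresholds: deliver the message unchanged.
    return Decision(True, None)


def player_report(events: Iterable[Tuple[str, float]],
                  toxic_ratio: float = 0.2) -> dict:
    """Label each player 'toxic' or 'non-toxic' from (player_id, score) pairs.

    A player is labeled toxic when the fraction of their messages that were
    blocked reaches toxic_ratio (a hypothetical cutoff).
    """
    totals: Counter = Counter()
    blocked: Counter = Counter()
    for player, score in events:
        totals[player] += 1
        if not moderate_message(score).allowed:
            blocked[player] += 1
    return {
        player: "toxic" if blocked[player] / totals[player] >= toxic_ratio else "non-toxic"
        for player in totals
    }


if __name__ == "__main__":
    print(moderate_message(0.9).allowed)  # False
    print(player_report([("a", 0.1), ("a", 0.95), ("b", 0.2)]))
    # {'a': 'toxic', 'b': 'non-toxic'}
```

Keeping the thresholds as module-level constants makes them easy to tune per game, which fits the idea of customizing detection to each studio's environment.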

About Us

Team members Dheeraj Khandelwal, Eric Wang, Jacqueline Hong, Kevin Tran, and Sven Wu are all upperclassmen at UC Berkeley. They aim to apply their data science experience and knowledge to the most pressing issues in gaming today, creating a safer online experience and community for gamers of all ages. The project is supported by UC Berkeley's Sutardja Center for Entrepreneurship and Technology and its Data-X course.

Press Contact:

Kevin Tran

Sven Wu

Public GitHub:

https://github.com/dj-khandelwal/GGWP-Toxic